The AI Your Competitors Can’t Buy

Exec Summary

THE PROBLEM

Every large enterprise is investing in AI. Most are deploying the same tools, from the same vendors, achieving the same incremental efficiency gains. By 2027, these capabilities will be commoditised. The result: AI spend that buys parity, not advantage.

THE INSIGHT

A qualitative shift occurs between AI that automates workflows and AI that deliberates. Below that threshold, AI makes your people more efficient. Above it, AI makes your organisation more intelligent — generating what we call Intelligence Capital: institutional knowledge that compounds with every decision, persists independently of any individual, and cannot be replicated by purchasing the same technology later.

THE FRAMEWORK

The Agentic AI Maturity Model defines three tiers of investment. Tier 1 (AI Assistants) is table stakes. Tier 2 (Agentic Automation) delivers essential productivity gains and funds the programme. Tier 3 (Agentic Intelligence) generates Intelligence Capital through genuine multi-agent deliberation, transparent reasoning capture, and cross-functional coordination. The strategic imperative is to build Tier 2 and Tier 3 in parallel — so that automation generates Intelligence Capital from day one. First-mover advantage is structural: organisations that begin earlier accumulate an advantage that late-movers cannot close, because they lack the compounding history.

THE BASIS

This article is not theory. It draws on our direct experience designing and deploying advanced agentic AI systems — including Level 4 agentic teams — within regulated sectors over the past two years. The frameworks described here reflect what we have seen work, and fail, in production.

The Parity Problem

Every large enterprise is investing in AI. Most are deploying the same tools, from the same vendors, achieving the same incremental efficiency gains. Copilots help people draft emails faster. Chatbots deflect routine enquiries. Robotic process automation handles data entry. These are useful investments. They are also being replicated simultaneously across every competitor.

By 2027, these capabilities will be commoditised. The subscription fee for an AI assistant will be a rounding error in the technology budget. Every organisation will have one. Your AI spend will have bought parity, not advantage.

This is the central strategic question for enterprise leaders today: is your AI investment building something a competitor cannot replicate by purchasing the same technology six months later?

For most organisations, the honest answer is no. And that answer points to a fundamentally different kind of AI investment — one that doesn’t just automate but accumulates. One whose value doesn’t depreciate with the next vendor release but appreciates with every decision the organisation makes.

We call this Intelligence Capital, a term coined by Professor David Shrier (Imperial College London). The framework developed below builds on that foundation. [1]

From AI Tools to Intelligence Capital

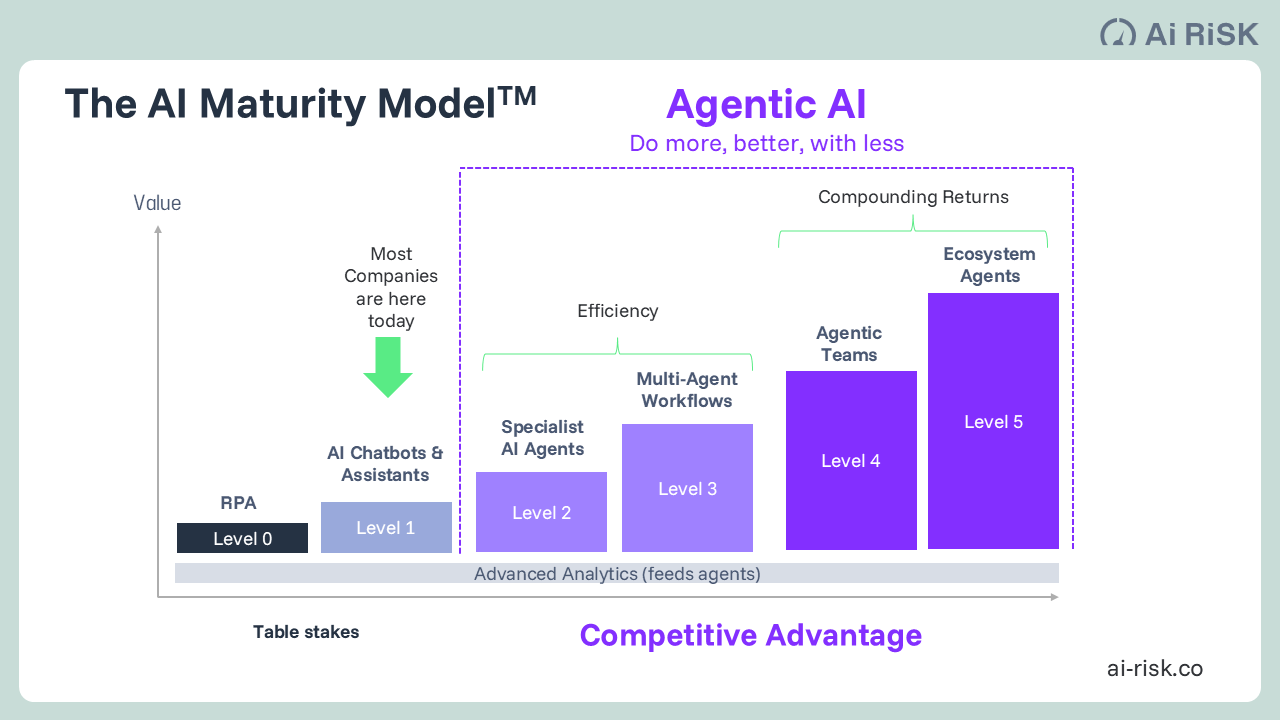

The Agentic AI Maturity Model describes a progression from Level 0 to Level 5 — from simple rule-based automation to ecosystem-wide agent networks. But the most important transition in the model is not between any two adjacent levels. It is the qualitative shift that occurs between Level 3 and Level 4: the shift from AI that processes to AI that deliberates.

Below that threshold, AI makes your people more efficient. Above it, AI makes your organisation more intelligent. That distinction — between a productivity tool and a capital-generating asset — is the organising principle of everything that follows.

The word “capital” is deliberate. Enterprises already manage several forms of capital, each with distinct characteristics. Financial capital funds the enterprise but earns only market returns and is available to any competitor with access to the same markets.

Human capital provides expertise and judgment but depreciates when people retire, move to competitors, or are simply unavailable — it is the most valuable and most fragile asset most knowledge-intensive organisations possess. Data capital provides the raw material for decisions but is increasingly commoditised and, on its own, inert.

Intelligence Capital is distinct from all three.

It captures the judgment applied to data — not the data itself but the reasoning, expertise, and cross-functional insight that transforms data into decisions. Unlike human capital, it never leaves. Unlike financial capital, it cannot be acquired by a competitor. Unlike data capital, it appreciates with use. It is, in economic terms, a new factor of production.

“Intelligence Capital

Institutional intelligence — decision rationale, expert judgment patterns, cross-functional insight — captured, encoded, and compounded by agentic AI systems. Unlike conventional technology, it appreciates with use. Unlike individual expertise, it never leaves. And unlike the AI your competitors are buying, it cannot be replicated by purchasing the same tools.”

Intelligence Capital is not a metaphor. It describes a concrete economic phenomenon: when AI systems are designed to deliberate rather than merely process, they generate institutional knowledge that persists independently of any individual employee, compounds with every subsequent decision, and creates an advantage that widens over time rather than eroding.

The organisation that begins generating IC today will accumulate years of institutional intelligence. No late-mover can close that lead, whichever technology they deploy, because they lack the compounding history.

Three Tiers of AI Investment

Not all AI investment is equal. The maturity model defines six levels, but for strategic decision-making, what matters is understanding three fundamentally different tiers of investment — each with distinct economics, distinct competitive dynamics, and a distinct relationship to Intelligence Capital.

Tier 1: AI Assistants (Levels 0–1)

This tier encompasses the rule-based automation (RPA) that has been deployed for a decade, plus the generative AI tools — copilots, chatbots, and assistants — that have proliferated since 2023. The common characteristic: your people ask questions, get answers, and do the work themselves. The human remains the decision-maker, the bottleneck, and the repository of expertise.

Level 0 (Scripted Automation) is hard-coded rules executing individual steps with no autonomy, such as legacy bots and basic OCR workflows. Its value depreciates as processes change, and it is being actively replaced across the market.

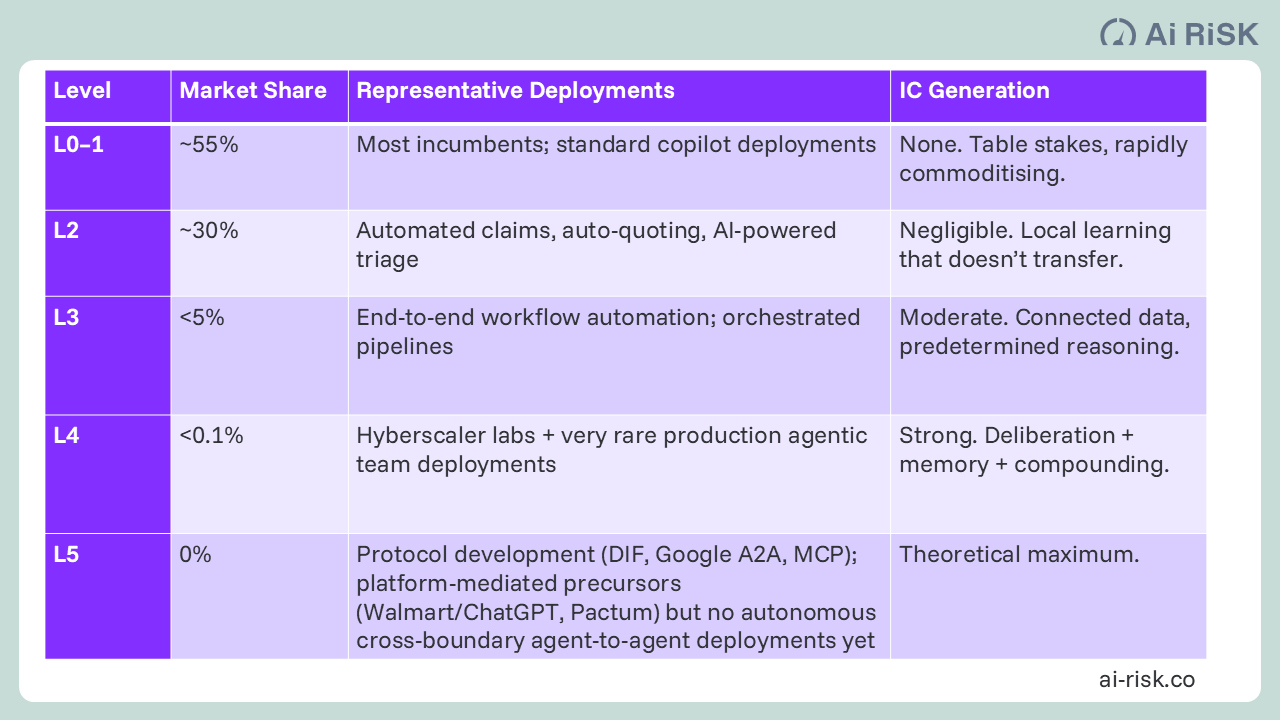

Level 1 (AI Chatbots) is a single language model agent that drafts, triages, or retrieves information, with mandatory human approval for every significant action. This is where approximately 55% of current enterprise AI deployments sit. Consumer tools like ChatGPT, Microsoft Copilot, and Claude deliver L1 capability for $20–$100 per user per month. When your competitors can buy the same capability from multiple vendors at a standard subscription fee, it is table stakes, not competitive advantage.

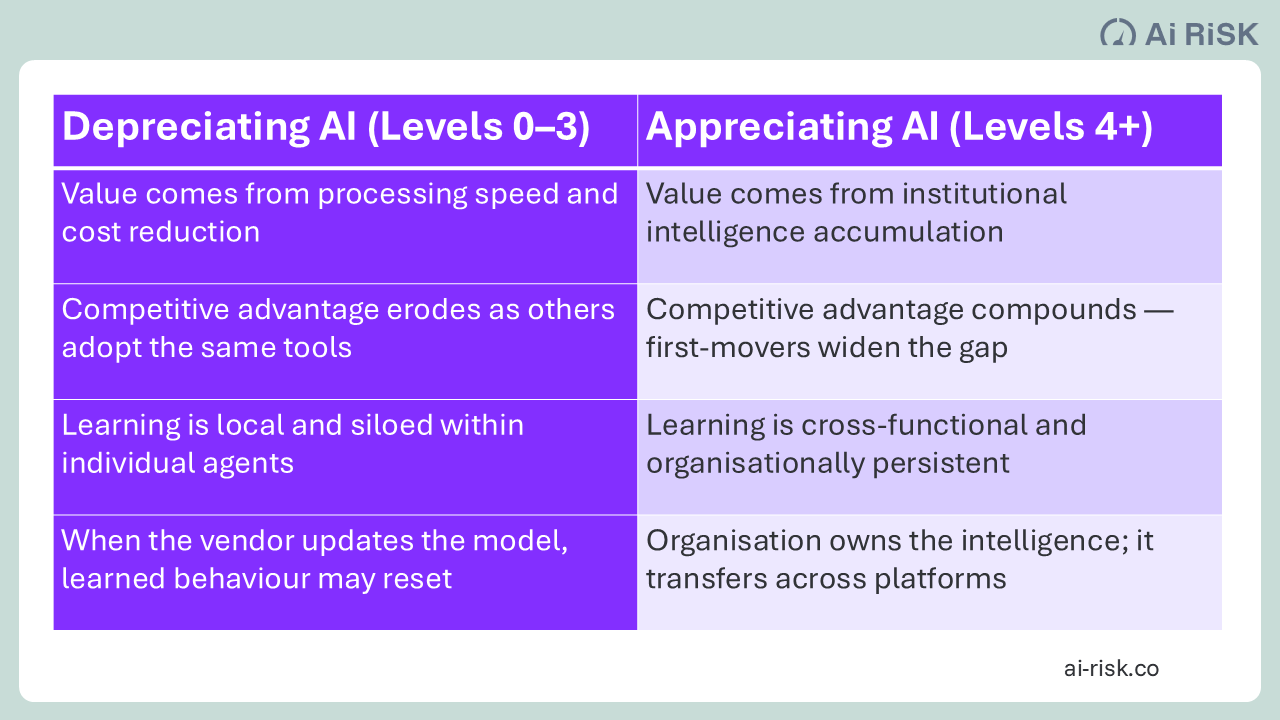

IC Profile: None. The AI suggests; the human decides; nothing of lasting institutional value is captured. Expertise remains in the individual’s head and walks out the door when they leave.

Tier 2: Agentic Automation (Levels 2–3)

This is the critical productivity layer: specialist AI agents and multi-agent workflows that complete bounded tasks autonomously — done by AI, not advised by AI. This tier delivers the efficiency gains that fund the broader transformation programme and, crucially, creates the structured data environment that makes higher-level intelligence possible.

Level 2 (Specialist AI Agents) deploys a single domain-specific agent that both decides and executes for routine cases, escalating edge cases to humans. Production examples span industries: two-second insurance claims payouts, automated contract review in legal, real-time credit decisioning in banking, and AI-generated diagnostic pre-screening in healthcare. Level 2 accounts for approximately 30% of the current market.

Level 3 (Multi-Agent Workflows) orchestrates several specialist agents in preset pipelines. An insurance submission flows through pricing, issuance, and servicing; a loan application moves from intake through credit assessment, compliance checks, and approval; a patient referral routes through triage, scheduling, and care coordination. Exceptions route to humans or rules engines. This level accounts for less than 5% of the current market, but leading enterprise AI investment is starting to concentrate here.

IC Profile: Low to moderate. L2 agents learn to do their specific task better, but that learning is local, siloed, and non-transferable. A competitor who buys the same agent gets the same capability on day one. L3 workflows connect information across process steps, which is more powerful than isolated agents, but the pipeline is predetermined — it doesn’t question whether it’s asking the right questions. It produces throughput, not judgment.

The GM Trap

Level 3 is where the greatest risk of misallocated investment concentrates. The temptation is to deploy powerful technology to automate existing processes faster — the General Motors approach of the 1980s, which spent billions on robotics to do the same thing with fewer people. Toyota's approach was different: redesign how the organisation thinks, learns, and improves. The result was not just efficiency but institutional intelligence that compounded for decades — enabling Toyota to produce higher-quality vehicles at lower cost than GM, despite GM outspending them on automation throughout the 1980s and 1990s. Today Toyota is worth roughly four times GM, a reversal that would have seemed absurd when GM was the world's most valuable automaker. L3 is efficient. It is not intelligent. The question is whether your AI investment follows GM or Toyota. [2]

Tier 2 is essential. It funds the programme, builds the data infrastructure, and — importantly — these are the agents that will eventually represent your organisation in cross-boundary commerce. But Tier 2 alone is a race to parity. Every competitor will have equivalent automation within 24 months.

Tier 3: Agentic Intelligence (Levels 4–5)

This is where the economics change fundamentally. This is where Intelligence Capital is generated.

Level 4 (Agentic Teams) introduces something that has never previously existed in automated systems: genuine deliberation. A small group of role-based AI agents deliberates together on complex, unstructured problems — surfacing divergent views, weighing evidence, and jointly producing a reasoned recommendation with a transparent audit trail. The pattern mirrors a human committee rather than an assembly line. Human professionals participate as peers: they can accept, challenge, or overrule decisions but are not required for every run.

In one of our live production deployments in insurance, L4 Agentic Teams achieved a 70% reduction in required human staff while increasing customer satisfaction by 25% and improving financial performance by 11 percentage points. Human employees reported higher job satisfaction and requested AI “clones” to support their work. The pattern — fewer people, better outcomes, higher morale — is replicable across any knowledge-intensive domain.

The critical difference: when an L4 team convenes on a complex problem, the output is not just a recommendation. It is a reasoned opinion with a complete record of how that opinion was formed — arguments considered, evidence weighted, disagreements surfaced, and why the final recommendation prevailed. That deliberation record is where Intelligence Capital actually gets generated. And when a senior professional reviews the analysis and adjusts it, the system captures why they adjusted — transferring expertise from a depreciating individual asset to an appreciating institutional one.

Level 5 (Ecosystem Agents) extends agentic capability across organisational boundaries. External agents query yours for terms and availability.

L5 remains early-stage in production, but recent cross-industry research reveals a structural challenge that most enterprises have not yet recognised. When your agents negotiate with a counterparty's agents — broker to insurer, supplier to buyer, provider to payer — both sides require cryptographically verifiable identity, delegated authority, and immutable audit trails.

The protocols currently underpinning most agent-to-agent infrastructure, including Google's A2A protocol and Anthropic's Model Context Protocol, rely on authentication mechanisms such as OAuth. These mechanisms were designed for humans delegating access to applications, not for autonomous agents operating across trust boundaries.

Security architects across insurance, healthcare, financial services, and supply chain management are independently reaching the same conclusion: the foundation on which most enterprises are building agent infrastructure cannot support cross-boundary trust at scale.

This matters for Intelligence Capital strategy because L5 is where institutional intelligence becomes market intelligence — and market intelligence requires trusted interaction with agents you do not control. Organisations building L4 capability now should architect their agent identity and governance frameworks with cross-boundary trust in mind.

Retrofitting trust infrastructure onto agents designed for internal use is significantly more expensive than designing it in from the start. L4 readiness remains the natural foundation for L5 capability, but only if the identity, delegation, and audit architecture is built to extend beyond the enterprise boundary.
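The "immutable audit trail" requirement can be illustrated with a hash-chained log. This is a minimal sketch using only Python's standard library. It is not a description of A2A, MCP, or any other protocol, and all names (`AuditLog`, `agent_id`, `on_behalf_of`) are hypothetical. Hash chaining makes tampering detectable, but it does not prove who an agent is. Verifiable identity and delegated authority would also need public-key signatures and a way to check them across organisations, which this sketch omits.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class AuditLog:
    """Append-only log in which every entry commits to the hash of the one before."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so identical content always hashes identically.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def append(self, agent_id: str, on_behalf_of: str, action: str) -> dict:
        body = {
            "agent_id": agent_id,          # which agent acted
            "on_behalf_of": on_behalf_of,  # the principal that delegated authority to it
            "action": action,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered entry breaks the chain."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("broker-agent-7", "Example Brokers Ltd", "request quote: property, 12 months")
log.append("insurer-agent-2", "Example Insurer plc", "issue quote Q-1001")
print(log.verify())  # True
log.entries[0]["action"] = "request quote: property, 24 months"  # simulated tampering
print(log.verify())  # False
```

A party controlling the whole log could still recompute every hash after editing it. In a cross-boundary setting, each side would therefore need to retain or publish the latest hash, so neither can silently rewrite the shared history.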

IC Profile: Strong (L4) to transformative (L5). This is where Intelligence Capital is generated: institutional intelligence from L4 deliberation, market intelligence from L5 ecosystem interaction. Together, they create an advantage that compounds over time and that competitors cannot replicate by purchasing the same technology.

What Makes Level 4 a Capital-Generating Asset

Not every L4 implementation generates Intelligence Capital. For the system to produce genuine institutional intelligence that compounds, six characteristics must be designed in from the start. Without any one of them, you get a clever system but not a capital-generating one.

Genuine multi-perspective deliberation. Agents must debate, not simply execute in parallel and merge results. Distinct specialist personas examine the same problem from genuinely different angles. The test: can the system produce a minority opinion that challenges consensus? If it cannot, it is a pipeline dressed as a team.

Transparent reasoning capture. Every deliberation produces a complete record of how the output was reached: arguments considered, evidence weighted, disagreements surfaced, and why the final recommendation prevailed. This is the raw material of IC. In regulated industries, it delivers explainability as a natural byproduct rather than a separate compliance burden.

Institutional memory that compounds. The system retains deliberation history and uses it to inform future deliberations. A complex case today draws on how analogous problems were analysed previously — not just what was decided but how reasoning evolved. A coverage dispute draws on previous disputes. A credit exception draws on previous exceptions. A diagnostic puzzle draws on previous puzzles. Every deliberation builds on every previous one.

Human-AI integration that captures expertise. The system elicits and encodes human judgment, not merely requests sign-off. When a professional reviews L4 analysis and adjusts it, the system captures why — transferring expertise that would otherwise depreciate when that individual retires, is promoted, or moves to a competitor. Digital twins of senior experts are a key mechanism: AI agents that encode specific individuals’ judgment patterns and make them available to the whole organisation permanently.

Cross-functional coordination. L4 in a single function creates local intelligence. The step-change occurs when L4 deliberations in one domain connect to and enrich another. In insurance, claims intelligence feeds underwriting decisions. In banking, credit monitoring intelligence improves origination models. In healthcare, treatment outcome data sharpens diagnostic protocols. In every case, portfolio-level insights shape both sides. This cross-functional coordination — what we call the Coordination Layer — transforms individual IC generators into an IC engine.

Self-improvement mechanisms. The system conducts retrospectives, identifies where reasoning failed, and adjusts. Meta-learning distinguishes an IC generator from a static expert system. It is the mechanism by which the system’s rate of improvement accelerates rather than plateaus — each cycle of deliberation making the next cycle more valuable.
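Several of these characteristics can be made concrete as a data structure. The sketch below is hypothetical: `DeliberationRecord`, `record_override`, and `analogous_cases` are illustrative names, not part of any product or library. It does three things:

- It stores every position argued, not only the winner, so the minority-opinion test becomes a simple query.
- It refuses a human override that arrives without a reason.
- It retrieves analogous past cases by tag overlap (Jaccard similarity). This is a deliberately simple stand-in for the embedding-based search a production system would more likely use.

```python
from dataclasses import dataclass, field


@dataclass
class Position:
    agent_role: str        # hypothetical specialist persona, e.g. "pricing"
    recommendation: str
    rationale: str


@dataclass
class DeliberationRecord:
    case_id: str
    tags: set                                      # hypothetical case descriptors
    positions: list = field(default_factory=list)  # every view argued, not just the winner
    final_recommendation: str = ""
    why_it_prevailed: str = ""
    human_override: str = ""                       # what a reviewer changed, if anything
    override_reason: str = ""                      # and why: the expertise being captured

    def minority_opinions(self) -> list:
        """Positions that disagreed with the final recommendation."""
        return [p for p in self.positions if p.recommendation != self.final_recommendation]

    def record_override(self, decision: str, reason: str) -> None:
        if not reason.strip():
            raise ValueError("an override without a reason captures nothing")
        self.human_override, self.override_reason = decision, reason


def jaccard(a: set, b: set) -> float:
    """|A & B| / |A | B|, defined as 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def analogous_cases(new_tags: set, memory: list, k: int = 3) -> list:
    """Most similar past records first; records with no tag overlap are ignored."""
    scored = [(jaccard(new_tags, r.tags), r.case_id, r) for r in memory]
    scored = [s for s in scored if s[0] > 0]
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [r for _, _, r in scored[:k]]


past = DeliberationRecord("D-101", {"coverage-dispute", "flood", "ambiguous-wording"})
past.positions = [
    Position("pricing", "pay", "loss is within policy limits"),
    Position("claims", "pay in part", "wording appears to exclude surface water"),
]
past.final_recommendation = "pay in part"
past.why_it_prevailed = "wording review supported the exclusion"
past.record_override("pay in full", "marketing material implied cover; dispute risk outweighs saving")

memory = [past, DeliberationRecord("C-201", {"credit-exception", "smb"})]
print([p.agent_role for p in past.minority_opinions()])  # ['pricing']
print([r.case_id for r in analogous_cases({"coverage-dispute", "flood"}, memory)])  # ['D-101']
```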

The Economics of Intelligence Capital

The maturity model is not merely a capability taxonomy. It describes a fundamental economic transition in how AI creates value. Understanding this transition is essential for making sound investment decisions.

Three Value Dimensions

AI creates value along three dimensions. Each builds on the previous one, and the three together are not additive — they are compounding.

Productivity (doing cheaper). Immediate efficiency gains from automation — the routine work that currently consumes the majority of professional time. Every maturity level delivers this. Measurable ROI within months. Necessary, but not sufficient — and the dimension most vulnerable to competitive replication. When your competitor deploys the same L2 agent, your efficiency advantage disappears.

Operational Capacity (doing more). Growth decoupled from headcount. When operational capacity is no longer constrained by the number of people you can hire and train, lines of business that were uneconomical to serve become viable. Markets and segments where the cost of analysis, servicing, or decision-making has historically exceeded the revenue available are suddenly accessible. This is where AI stops reducing costs and starts growing the top line.

Coordination (doing better). Real-time intelligence flowing across functions. Feedback loops between operational domains that improve decision quality cycle after cycle. Institutional memory that never retires. And as ecosystem agents mature, agent-to-agent interactions generating market intelligence that deepens your competitive position. This is where the advantage becomes structural — because what you have built is not a faster process but an organisational intelligence that competitors cannot replicate by purchasing.

Each dimension enables the next. Productivity gains fund capacity expansion. Capacity generates the decision volume that makes coordination valuable. Coordination multiplies the returns on both. Intelligence Capital emerges from the intersection of all three dimensions working together.

Depreciating vs. Appreciating AI

The critical economic distinction is between AI investments that depreciate and those that appreciate.

The compounding dynamic is what makes this distinction decisive. Once an organisation begins generating Intelligence Capital, the system’s rate of improvement accelerates rather than plateaus. Each cycle of deliberation adds to the institutional memory that informs later decisions, so it makes the next cycle more valuable. First-mover advantage in IC formation is therefore structural. Organisations that begin earlier build an advantage that late-movers cannot close simply by deploying the same technology, because late-movers lack the compounding history.

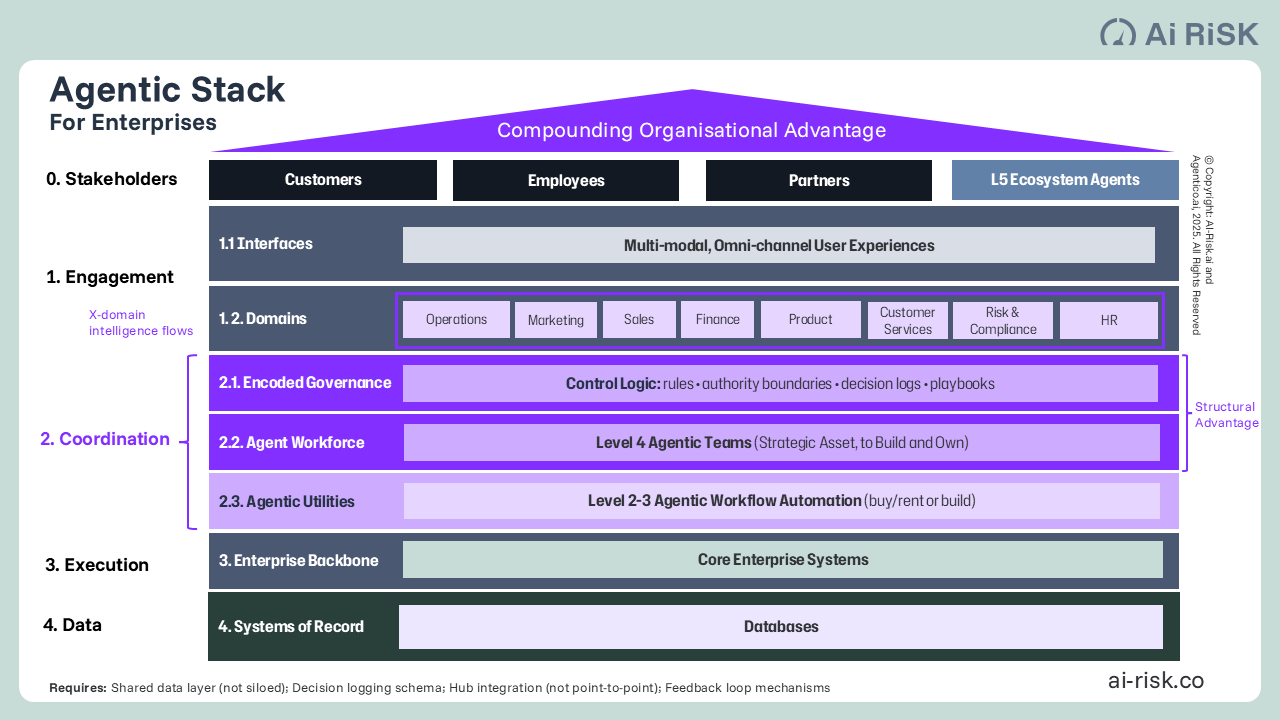
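The compounding claim can be checked with a toy model. The model assumes, purely for illustration, that each year adds a fixed amount of newly captured reasoning (`new_per_year`). It also assumes that the existing stock grows by a reuse rate (`reuse_rate`) because past deliberations inform new ones. Both parameters are hypothetical, not measured values. The sketch compares an organisation that starts two years earlier with one that starts later.

```python
def ic_stock(years_active: int, new_per_year: float = 100.0, reuse_rate: float = 0.2) -> float:
    """Toy Intelligence Capital stock after `years_active` years (arbitrary units)."""
    stock = 0.0
    for _ in range(years_active):
        # Existing stock grows through reuse, then this year's new reasoning is added.
        stock = stock * (1 + reuse_rate) + new_per_year
    return stock


def gap_by_year(horizon: int, head_start: int, **params) -> list:
    """Early mover's lead over a late mover who starts `head_start` years later."""
    return [
        ic_stock(year, **params) - ic_stock(max(0, year - head_start), **params)
        for year in range(1, horizon + 1)
    ]


compounding = gap_by_year(horizon=5, head_start=2)
print([round(g, 1) for g in compounding])  # [100.0, 220.0, 264.0, 316.8, 380.2]
print(gap_by_year(horizon=5, head_start=2, reuse_rate=0.0))  # [100.0, 200.0, 200.0, 200.0, 200.0]
```

With `reuse_rate=0` the gap stops growing at 200 once the late mover starts. The widening therefore depends entirely on the reuse assumption, which is the quantity worth measuring in a real deployment.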

The Missing Layer: From ‘Human Middleware’ to Coordination Intelligence

In most enterprises today, coordination between functions happens through what might be called “human middleware” — email, meetings, institutional memory held in individuals’ heads, and expensive professionals acting as integration software. A senior professional who spots a pattern in one domain and mentions it to a colleague in another over lunch. A portfolio manager who notices risk accumulation and raises it at the quarterly review. A CFO who connects operational trends to pricing strategy because she has been in the business for twenty years.

This coordination is valuable. It is also fragile, slow, dependent on specific individuals, and impossible to scale. When the experienced professional leaves, the coordination capability leaves with them. As the organisation grows, the number of potential coordination pathways rises much faster than headcount, roughly with the square of the number of functions that need to coordinate. The human capacity to maintain those pathways does not keep pace.

The maturity model’s most important architectural concept is the Coordination Layer — a new structural layer in the enterprise architecture that replaces human middleware with encoded, scalable infrastructure that learns. This layer sits between client-facing engagement systems and the enterprise backbone. It does not exist today in most organisations. Its absence is why automation in one function fails to improve performance in another.

The Coordination Layer comprises three integrated components. First, agentic utilities: the L2–3 specialist agents and multi-agent workflows that automate bounded tasks and provide the structured data that higher layers operate on. Second, the agent workforce: L4 Agentic Teams that deliberate on complex problems, capture reasoning, and generate Intelligence Capital. Third, encoded governance: the control logic, authority boundaries, decision logs, and playbooks that ensure agents operate within risk appetite and compliance requirements — woven into the architecture so that governance is a natural byproduct of operation rather than a separate compliance burden.

Together, these components create the mechanism by which intelligence generated in one domain enriches every other. To make this concrete with an example from insurance: the claims system identifies a recurring dispute pattern arising from ambiguous contract wording. In a traditional operation, this insight lives in a handler’s head.

With the Coordination Layer, it is detected systematically, flagged to the underwriting team with supporting evidence, and incorporated into the next renewal cycle. The value originates in one domain but is realised in another. Neither function could produce this alone. The same dynamic applies in any knowledge-intensive enterprise — customer service intelligence improving product design, credit monitoring sharpening origination, clinical outcomes refining diagnostic protocols.
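The claims-to-underwriting example can be sketched as a publish/subscribe pattern. This is an illustrative, in-process stand-in for the Coordination Layer, not an implementation of it. The topic name `underwriting.wording-review`, the claim fields, and the threshold of three disputes are all hypothetical. A claims-side detector counts disputes per policy clause. Once a clause crosses the threshold, the detector publishes a finding with its supporting evidence to whichever underwriting process has subscribed.

```python
from collections import Counter, defaultdict


class CoordinationBus:
    """Minimal publish/subscribe hub standing in for the Coordination Layer."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, finding):
        # Deliver the finding to every function that registered interest in the topic.
        for handler in self._subscribers[topic]:
            handler(finding)


def detect_recurring_disputes(claims, bus, threshold=3):
    """Flag any policy clause that appears in at least `threshold` disputed claims."""
    counts = Counter(c["clause"] for c in claims if c["disputed"])
    for clause, n in counts.items():
        if n >= threshold:
            evidence = [c["claim_id"] for c in claims if c["disputed"] and c["clause"] == clause]
            bus.publish("underwriting.wording-review",
                        {"clause": clause, "disputes": n, "evidence": evidence})


renewal_queue = []  # underwriting's inbox for the next renewal cycle
bus = CoordinationBus()
bus.subscribe("underwriting.wording-review", renewal_queue.append)

claims = [
    {"claim_id": "C1", "clause": "4.2 flood", "disputed": True},
    {"claim_id": "C2", "clause": "4.2 flood", "disputed": True},
    {"claim_id": "C3", "clause": "7.1 theft", "disputed": False},
    {"claim_id": "C4", "clause": "4.2 flood", "disputed": True},
]
detect_recurring_disputes(claims, bus)
print(renewal_queue)
# [{'clause': '4.2 flood', 'disputes': 3, 'evidence': ['C1', 'C2', 'C4']}]
```

The design choice that matters is the decoupling. The claims detector does not need to know which underwriting process consumes its findings, so new consumers (pricing, product design) can subscribe without changing the claims side.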

Without the Coordination Layer, each function improves in isolation. With it, the organisation builds an intelligence engine whose value accelerates across functions. This cross-domain compounding transforms local automation into structural competitive advantage.

The Strategic Playbook

The Portfolio Approach

Rather than progressing sequentially through the levels, our model recommends a portfolio approach: deploy Tier 2 and Tier 3 initiatives in parallel, with deliberate time-horizon balancing.

Tier 2 agentic systems deliver quick wins and fund the programme. Target processes with clear automation potential and measurable ROI. Accept that these capabilities will commoditise — extract value now while they still differentiate. Equally important, Tier 2 creates the structured data environment and integration infrastructure that Tier 3 requires.

Tier 3 Agentic Teams generate Intelligence Capital from day one. Begin with functions where decision quality has the highest financial leverage and where expert judgment is most scarce and most valuable. L4 teams can begin deliberating on manually processed cases before L2–3 automation is complete. IC generation starts from the moment of deployment, independent of automation maturity.

The critical insight: most consultancies and vendors help organisations move from Tier 1 to Tier 2. The organisations that pull ahead will be those that build Tier 2 and Tier 3 in parallel — so that automation generates Intelligence Capital from the start.

The First-Mover Imperative

Intelligence Capital formation has a specific temporal dynamic that differs from conventional technology adoption. With most technology, waiting for costs to fall or standards to emerge is rational. With IC, waiting is costly — because the value compounds from the start date.

Every month of delay is a month of IC not generated. The organisation that begins in 2026 will have two years of compounding institutional intelligence by 2028. A late-mover cannot close that advantage because they lack the accumulated decision history, captured expertise, and cross-functional learning. The gap between early and late movers widens over time rather than narrowing.

Expert knowledge adds urgency. Senior professionals’ expertise is finite and depreciating — they retire, are promoted, or move to competitors. Capturing their judgment in digital twins before departure creates permanent institutional value. Waiting means the expertise may no longer be available to capture.

Where the Market Stands

The technology is maturing rapidly. The major AI platforms are converging on agent infrastructure, each approaching from a different direction: enterprise agent management, desktop agent productivity, multi-agent orchestration, and agent-to-agent protocols. This convergence validates the architectural thesis of the maturity model and will make it progressively easier to deploy Tier 1 and Tier 2 systems.

But a critical gap persists across all major platforms at the time of writing. None offers genuine multi-perspective deliberation as a native capability. They automate and orchestrate. They do not deliberate, challenge, or produce transparent reasoning records.

A parallel gap exists at the infrastructure layer. No major platform has yet solved cross-boundary agent trust without depending on its own identity infrastructure — creating a structural incentive to keep enterprises locked into proprietary authentication and governance stacks. The vendors' interests and the enterprise's need for portable, interoperable agent identity are not aligned.

This reinforces our central thesis: the capabilities that generate lasting competitive advantage — deliberation, institutional memory, and trusted cross-boundary interaction — are precisely the capabilities that cannot be purchased from a platform vendor.

Several platforms are beginning to articulate language convergent with Intelligence Capital — “durable institutional memory,” “agent workforces,” “persistent identity” — but the gap between marketing language and production capability remains substantial.

This has a specific strategic implication: organisations that wait for major platforms to deliver L4 capability will find themselves with efficient automation and no accumulated Intelligence Capital, while first-movers have been compounding for years. The platforms will eventually deliver elements of L4. By then, the competitive window will have closed.


How the Building Blocks Work

For leaders evaluating maturity claims and investment decisions, a brief understanding of the underlying capabilities is useful.

Large language models are the foundation powering every level. Think of an LLM as a highly capable analyst who has read everything but is locked in a room without internet, calculator, or phone. Vast knowledge, but no ability to access real-time information, perform reliable calculations, or take actions.

Tool integration gives that analyst a phone, calculator, and internet connection — the ability to access databases, execute code, query external systems. Essential for Level 1 and above, but not differentiating.

Advanced reasoning enables models to “think” before acting — breaking problems down, considering trade-offs, adjusting strategies, and showing reasoning transparently. This thinking process can run to thousands of words for complex problems. It is the foundation for genuine deliberation at Level 4.

Complete agent architecture combines four pillars: persona (defined role and expertise), memory (context across interactions and institutional knowledge), planning (advanced problem-solving), and action (tool integration and system execution). This creates digital workers with persistent identity and accumulated expertise.

Agentic teams are the breakthrough that enables Level 4: teams of agents that mirror human committee dynamics with collaborative decision-making, hybrid human-AI structures, and self-improving retrospectives. This is where the six IC-generating characteristics become possible.

Digital twins and expertise encoding are the IC capture mechanism. They replicate specific human experts’ judgment patterns and make them available to the whole organisation permanently. When a senior professional’s twenty years of judgment is encoded in a digital twin, it becomes an appreciating asset rather than a depreciating one that walks out the door at retirement.
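The four-pillar agent architecture can be sketched as a small class. Everything here is hypothetical: `Agent`, the keyword-matching `plan` method, and the example tools are placeholders. A real system would delegate planning to a language model and connect tools to actual enterprise systems. The point is only to show where persona, memory, planning, and action sit relative to one another.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    persona: str                                     # defined role and expertise (would shape the model's instructions)
    tools: dict[str, Callable[[str], str]]           # action: named tool integrations
    memory: list[str] = field(default_factory=list)  # context retained across tasks

    def plan(self, task: str) -> list[str]:
        """Placeholder planner: choose every tool whose name appears in the task.
        A production agent would ask an LLM to decompose the task instead."""
        return [name for name in self.tools if name in task]

    def run(self, task: str) -> list[str]:
        results = []
        for tool_name in self.plan(task):
            output = self.tools[tool_name](task)
            self.memory.append(f"{tool_name}: {output}")  # persist what was done
            results.append(output)
        return results


underwriter = Agent(
    persona="Commercial property underwriter",
    tools={
        "lookup_policy": lambda task: "policy P-42 found",
        "price": lambda task: "indicative premium computed",
    },
)
print(underwriter.run("lookup_policy, then price the renewal"))
# ['policy P-42 found', 'indicative premium computed']
```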

The Window

The technology platforms are converging. Within 24 months, the tools for Tier 1 and Tier 2 deployment will be available to every enterprise at commodity prices. The technology itself will not be a differentiator.

The differentiator will be the Intelligence Capital accumulated by the organisations that started building first.

The question for any enterprise leader is not whether to invest in agentic AI — that is no longer optional. The question is whether to generate Intelligence Capital now, or to wait and find that competitors have accumulated an advantage that technology alone cannot close.

Simon Torrance leads AI Risk, advising leaders in knowledge-intensive sectors on agentic AI strategy and implementation. The Agentic AI Maturity Model underpins AI Risk's enterprise transformation methodology. Contact: simon.torrance@ai-risk.co

If this framework is relevant to your leadership team, you're welcome to share it — the more enterprise leaders think about Intelligence Capital, the better the conversation becomes.

[1] The term “Intelligence Capital” was introduced by Professor David Shrier (Imperial College London / University of Oxford), whose work on the economics of AI-generated institutional knowledge provided the foundational stimulus for the framework developed here. See D. Shrier, The Intelligence Capital Manifesto (2025). The application to agentic AI maturity levels, the three-tier investment model, the Coordination Layer architecture, and the operational frameworks are the work of AI Risk.

[2] The application of the GM/Toyota parallel to agentic AI adoption draws on Sangeet Paul Choudary’s analysis of capability-driven versus workforce-driven AI deployment.